December 01, 2025

Details

Who: Jason Mohoney

Where: TMCB 1170

When: December 4th, 11 AM

Talk Title

The Mechanics of Vector Databases

Abstract

Vector databases are critical infrastructure underlying modern AI systems, enabling applications like semantic search and Retrieval-Augmented Generation (RAG). While the core concept of similarity search is well-understood, building indexes that perform well in practice presents unique challenges. This talk explores the mechanics of vector indexing, moving beyond basic approximate nearest neighbor algorithms to address real-world production needs. We will discuss the complexities of filtered vector search, where metadata constraints often break traditional indexing assumptions, and examine adaptive strategies for dynamic environments where data distributions constantly shift, optimizing index structures on the fly to balance latency and recall. We will conclude by highlighting open problems and future research directions in vector databases and indexing.

Biography

Jason Mohoney is a Postdoctoral Associate in the Data Systems Group at MIT, working with Prof. Tim Kraska. He completed his PhD at the University of Wisconsin-Madison under the supervision of Profs. Shivaram Venkataraman and Theodoros Rekatsinas. His doctoral research focused on building I/O-minimizing data systems, with a particular emphasis on efficient vector search indexing, a topic he also worked on during internships at Apple.

September 30, 2025

Where

TMCB 1170

When

September 30th | 2 PM - 3:15 PM

Biography

Jared M. Spataro, of Issaquah, Washington, serves as Chief Marketing Officer at Microsoft, where he leads the company’s AI at Work initiatives. For more than 15 years at Microsoft, he has been energized by the mission to empower every person and every organization on the planet to achieve more. In his current role, he helps organizations leverage AI to solve business challenges, reduce costs, and create new value. His team conducts research to anticipate and shape the future of work across industries while delivering innovative products and features within Copilot, Microsoft 365, Dynamics 365, and Power Platform.

Beyond his professional responsibilities, Jared finds his most important work in his faith and family life. Spataro was recently called as an Area Seventy over the North America Southwest and North America West Areas. He is currently the president of the Bellevue Washington Stake of The Church of Jesus Christ of Latter-day Saints and has previously served as a bishop and high councilor. He and his wife, Kimberly Ann, are the parents of four children. Jared describes his family and community as the foundation that inspires him to do better and be better in every aspect of his life.

September 22, 2025

Talk Title

Uncertainty Quantification and Reasoning for Reliable AI

Abstract

Despite the remarkable progress in machine learning, AI systems still struggle with uncertainty, which manifests as overconfident predictions, unreliable decisions, and vulnerabilities in safety-critical applications. Addressing these challenges is essential for deploying AI responsibly in real-world scenarios. In this talk, I will present my research on uncertainty quantification and reasoning, a foundational approach to making AI systems more reliable, interpretable, and safe. This talk focuses on three key aspects: (1) Uncertainty Quantification and Decomposition: analyzing inherent uncertainties derived from different root causes, aiming to enhance the transparency and trustworthiness of AI systems. (2) Uncertainty Reasoning in Machine Learning: developing a unified framework to assess and manage the uncertainties associated with AI predictions and decisions, improving model calibration, reliability, and risk assessment in critical safety domains. (3) Reliable Large Language Models (LLMs): addressing reliability gaps in foundation models by exploring novel uncertainty metrics to mitigate issues such as hallucinations, inconsistencies, and decision-making blind spots. Through the development of fundamental methodologies, theoretical insights, and practical applications, this research contributes to the responsible deployment of AI technologies.

Biography

Dr. Xujiang Zhao is a Researcher at NEC Laboratories America. He received his Ph.D. from the Computer Science Department at The University of Texas at Dallas in 2022. His research focuses on machine learning, NLP, and data mining, especially Uncertainty Quantification and Reasoning, Large Language Models, Reinforcement Learning, Natural Language Processing, and Graph Neural Networks. Dr. Zhao has published his work in top-tier machine learning and data mining conferences, including NeurIPS, ICLR, AAAI, ACL, NAACL, and EMNLP. He has served on technical program committees for several high-impact venues, such as ICML, NeurIPS, ICLR, KDD, ARR, and AAAI. He has also organized and chaired multiple workshops on Uncertainty Quantification, Decision Making, and Trustworthy AI at KDD and AAAI.

September 18, 2025

Talk Title

Toward Trustworthy Machine Learning Models and More Actionable Decision-Making

Abstract

Large Language Models (LLMs) and reinforcement learning (RL) hold great promise for decision-making, but concerns about trustworthiness and real-world transfer remain. This talk introduces Topology-based Uncertainty Quantification (Topo-UQ), which models reasoning as topologies to reveal inconsistencies beyond answer-level uncertainty, and Prompt-to-Transfer (PromptGAT), which uses LLM prompts to bridge the Sim-to-Real gap in RL solutions for traffic signal control. Together, these works highlight a path from assessing when to trust AI systems to enabling their actionability in real-world settings.

Biography

Longchao is a fourth-year Ph.D. candidate at Arizona State University. His research interests are data mining, RL, and trustworthy AI. He works on the trustworthiness of machine learning models and actionable decision-making, with publications in top venues such as NeurIPS, ICML, AAAI, KDD, CIKM, ECML-PKDD, Machine Learning, and SDM. He has received a 2025 Google Fellowship nomination, two school fellowship awards, and the SDM'25 best poster award.

September 09, 2025

Talk Title

I'll take the low road

Abstract

There seems to be a generally-accepted and expected path to success with a computer science degree. Get good grades, solve crazy interview puzzles, and land a cozy corporate job at a large software company.

But for some people that just sounds absolutely terrible. For some there is a different path: mediocre grades, extremely high curiosity, obsessive focus, startups, ideas...they prefer entering into the abyss with their destiny in their own hands.

That's the path I've embarked on. The low road. And it's led me through many open source projects, decentralized computing, blockchain, zero knowledge, fitting JavaScript and Python interpreters into WebAssembly, traveling the world, and more.

With an increasingly uncertain AI-powered future, what road will you take?

Biography

Jordan Last is the CEO of Demergent Labs. He's a rabid open source developer focused on blockchain, decentralized computing, and bringing TypeScript, JavaScript, and Python to a secure-by-default Wasm cloud environment known as the Internet Computer Protocol (ICP). BYU alumnus since 2017.

May 08, 2025

Title: “Pragmatic Reinforcement Learning for Real-World Use”

Abstract: In order to develop practical machine-learning-aided technology for the benefit of human users, it is critical to anchor scientific research and development in the intended real-world use cases. In this talk, I introduce specific modeling decisions that can be made to develop actionable insights from sequentially observed healthcare data as well as in the development of robust self-driving behaviors via large-scale self-play. By leveraging the inherent structure of the Reinforcement Learning paradigm, we effectively identify behaviors to avoid while also allowing for the development of emergent capabilities. Together, these advances establish a strong foundation for continued technological progress, and I will outline several such directions as a hopeful conclusion to my talk.

April 28, 2025

Abstract: Natural language processing (NLP) and Machine Learning (ML) more generally have the potential to break down linguistic barriers worldwide, yet millions who speak low-resource languages remain underserved by current technologies. Drawing on past collaboration with colleagues conducting basic research and applied science, I present key technical contributions in active learning (including cost-conscious annotation), topic modeling, and personalization systems that have influenced both theoretical understanding and real-world applications. Building on these foundations, I introduce an ambitious research agenda focused on machine translation for low-resource languages, a domain where current deep learning approaches fall short due to data scarcity. I will present a systematic multi-year plan exploring transfer learning, data augmentation, and cross-lingual modeling to address this grand challenge. This work not only promises significant contributions to NLP but also aligns with broader societal goals of democratizing access to information across linguistic barriers. The research vision combines technical innovation with a commitment to mentoring the next generation of computer scientists capable of tackling increasingly complex AI challenges.

Bio: Eric Ringger is a Computer Scientist innovating in the fields of Natural Language Processing and Machine Learning. The through-line of my research and applied science work has been to empower and augment people -- customers, partners, scholars -- with great automation built on NLP, ML, and AI. The other dominant focus of my career is mentoring and growing people in their learning and impact. I am passionate about computer science and devoted to helping people realize their amazing potential.

Most recently I was focused on enabling Zillow Group to use machine learning and AI -- including large language models -- to empower customers in finding and landing in a great home and to empower business partners with automation and intelligent support, all backed by the right automation and data. At Zillow Group I also built, led, and supported (1) the team that personalizes your search for and discovery of a place to live, (2) the team that understands your goals in the housing domain, (3) the team that personalizes Zillow's communications, (4) the team that builds the experimentation platform for controlled randomized experiments at scale for Zillow Group, and (5) the customer-focused product analytics team. I have contributed to progress in Search Ranking as a research scientist at Facebook and to the fields of Natural Language Processing and Text Mining as a professor at Brigham Young University, as a research scientist at Microsoft Research, and as a Ph.D. student at the University of Rochester.

April 07, 2025

Details

Where: TMCB 1170

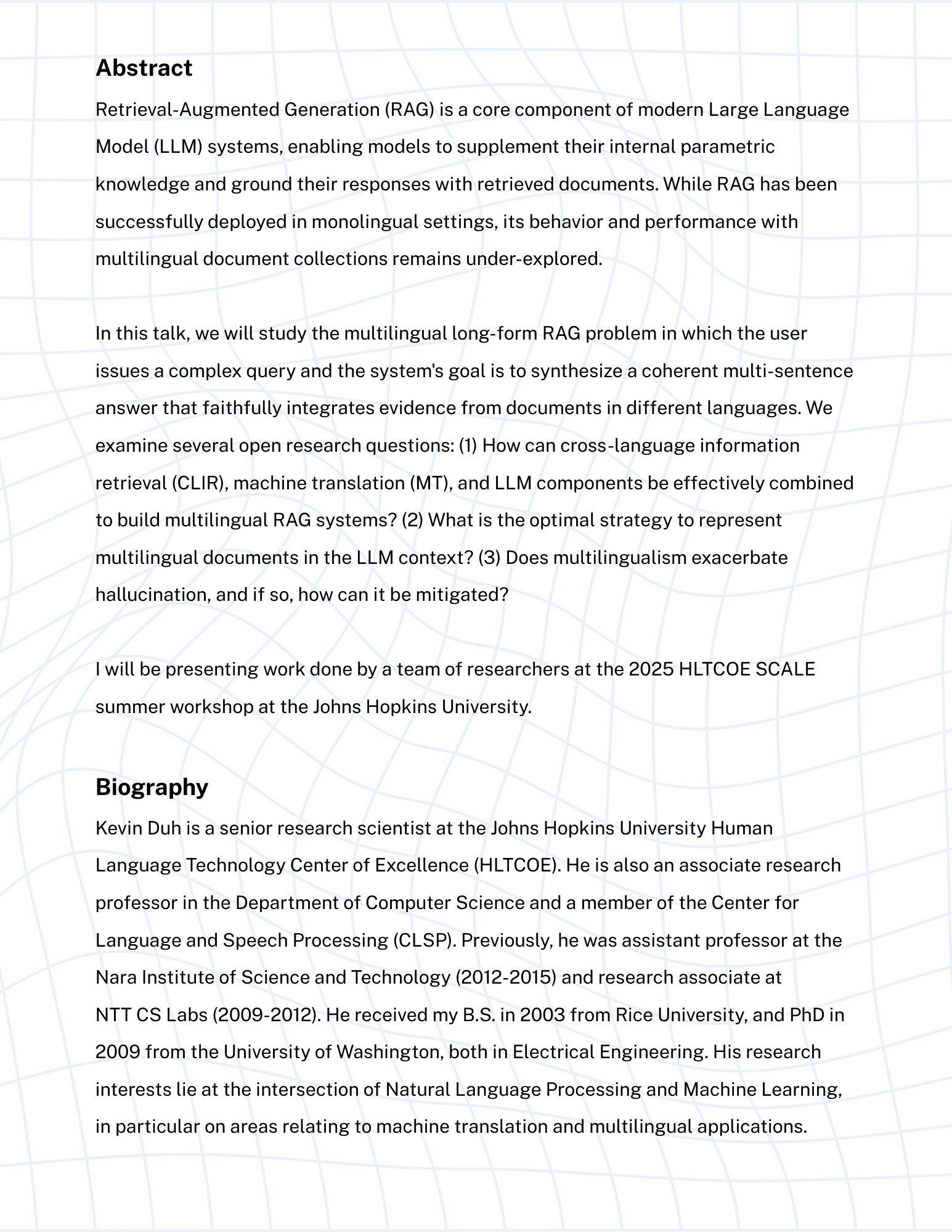

When: April 10th @ 11am

Speaker: Will Melville

Talk Title

Game-Theoretically Optimal Strategies in Baseball

Abstract

The purpose of our work is to define game-theoretically optimal strategies for every decision in a baseball game. We begin with a pitch sequencing model, which we use to define optimal swing decision strategies and pitch sequencing strategies. Next, we define a method to optimize fielder positioning against both static hitters who do not adapt their batted ball trajectories in response to the defense's positioning strategy and adaptable hitters who choose their batted ball trajectories in response to the defense's positioning strategy. Finally, we investigate managerial matchup decisions such as when to relieve a pitcher or pinch hit for a batter by modeling baseball as an extensive-form game. We define and evaluate five game playing algorithms, and we show that at least four of the algorithms could help actual baseball managers make winning decisions.

Biography

William Melville received his undergraduate degree in applied and computational mathematics (ACME) at BYU in 2020 before starting a job as an analyst with the Texas Rangers. He returned to BYU in 2022, where he is currently pursuing a PhD in computer science. His research focuses on applications of game theory to baseball strategy.

March 31, 2025

Details

Where: TMCB 1170

When: April 3rd

Speakers: Mark Transtrum & Gus Hart

Talk Title

eGAD! double descent is Explained by the Generalized Aliasing Decomposition

Abstract

A central problem in data science is to use potentially noisy samples of an unknown function to predict values for unseen inputs. Classically, predictive error is understood as a trade-off between bias and variance that balances model simplicity with its ability to fit complex functions. However, over-parameterized models exhibit counterintuitive behaviors, such as “double descent” in which models of increasing complexity exhibit decreasing generalization error. Other models may exhibit more complicated patterns of predictive error with multiple peaks and valleys. Neither double descent nor multiple descent phenomena are well explained by the bias–variance decomposition. I present the generalized aliasing decomposition (GAD) to explain the relationship between predictive performance and model complexity. The GAD decomposes the predictive error into three parts: 1.) model insufficiency, which dominates when the number of parameters is much smaller than the number of data points, 2.) data insufficiency, which dominates when the number of parameters is much greater than the number of data points, and 3.) generalized aliasing, which dominates between these two extremes. I apply the GAD to linear regression problems from machine learning and materials discovery to explain salient features of the generalization curves in the context of the data and model class.

Biography

Mark K. Transtrum received the Ph.D. degree in physics from Cornell University, Ithaca, NY, USA, in 2011. He then studied computational biology as a Postdoctoral Fellow at MD Anderson Cancer Center, Houston, TX, USA. Since 2013, he has been with Brigham Young University, Provo, UT, USA. He is currently an Associate Professor of physics and astronomy. His research includes representations of a variety of complex systems, including power systems, systems biology, materials science, and neuroscience.

Gus Hart is a professor in the department of Physics and Astronomy. He came to BYU from Northern Arizona in 2006. He completed a PhD at UC Davis with Barry Klein in 1999 and a postdoctoral appointment at the National Renewable Energy Laboratory in 2001 with Alex Zunger.

Until 2022, Gus' research was computational materials physics where his focus was on alloy modeling, algorithm development, and aflowlib.org. He is the author of enumlib, symlib, and other open source codes. He is the primary developer of the commercial UNCLE cluster expansion code.

Since 2022, Gus' research focus has shifted to data science and computational biophysics, with a particular focus on developing AI for bacterial tomograms.

March 24, 2025

Details

Where: TMCB 1170

When: March 27th @ 11 AM

Speakers: Student speakers

Abstract

Curious about what it’s like to network with industry leaders at companies like Amazon and Google? Join us for the Seattle Tech Trek Download, where BYU CS students will share their insights, key takeaways, and behind-the-scenes experiences from their trip. Whether you're looking for career advice, networking strategies, or just want to know what it’s like inside some of the world’s biggest tech companies, this is your chance to get the inside scoop! Don’t miss out on this opportunity to learn, ask questions, and get inspired for your own career journey.

March 13, 2025

Details

Where: TMCB 1170

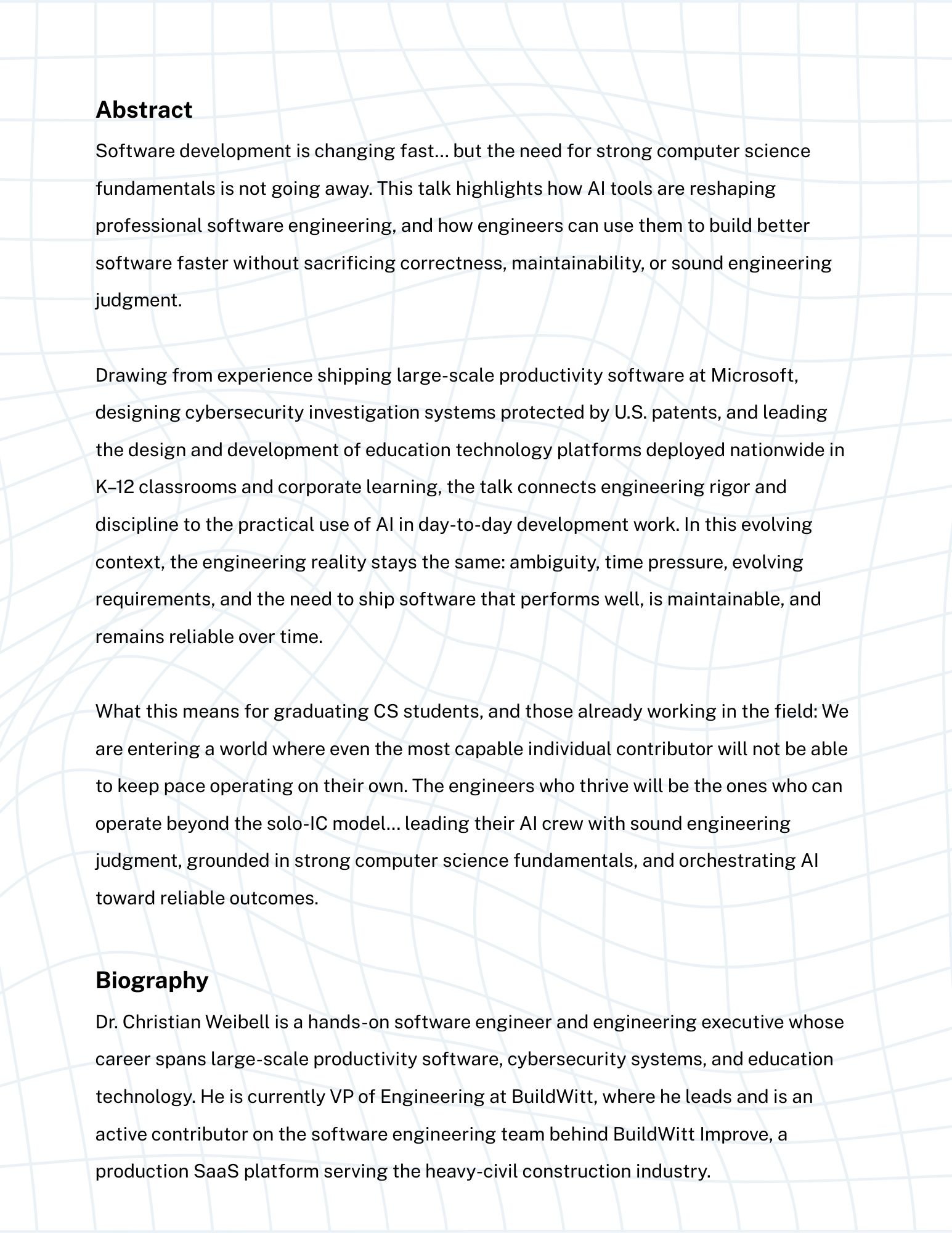

When: March 20th @ 11AM

Speaker: Jacob Sorber

Talk Title

Adventures in intermittent power and faith

Abstract

The Internet of Things has a battery problem. We simply can’t afford to recharge, replace, and dispose of trillions of batteries. Batteryless computing offers hope of a more sustainable future with devices that can be deployed maintenance-free for decades, but they are difficult to design, program, test, and deploy, due to frequent and unpredictable power failures. This talk will explore lessons learned from two decades of research on intermittently-powered systems and the transformative power of uncertainty and hope in the journeys of disciples and scholars.

Biography

Jacob Sorber is a Professor and Chair of the Computer Science Division of Clemson University’s School of Computing. His work makes mobile sensors and embedded systems more efficient, robust, deployable, and secure, by exploring novel systems (both hardware and software) and languages. His research has received support from the National Science Foundation (including a CAREER Award), the Dept. of Energy, the US Geological Survey, General Electric, and other sources. He works on problems in health, biology, agriculture, and manufacturing. Before joining Clemson, he was a postdoctoral researcher at Dartmouth College, a graduate student at UMass Amherst, and an undergraduate student at BYU.

Donuts will be served!

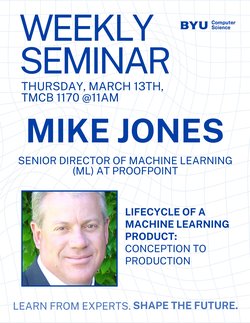

March 10, 2025

Details

Where: TMCB 1170

When: March 13th, 2025 @ 11:00 AM

Speaker: Mike Jones

Talk Title

Lifecycle of a Machine Learning Product: Conception to Production

Abstract

Building machine learning products that are both effective and scalable requires more than just model development—it’s about mastering the entire lifecycle, from conception to deployment. In this talk, we’ll break down the essential principles for creating successful ML systems, starting with a deep understanding of the business problem and how the product will provide real value to customers. We’ll discuss best practices, key trade-offs in system design, and how to balance the needs of software development with the demands of machine learning. Finally, we’ll explore how to monitor and maintain deployed systems to ensure they continue to evolve and meet business needs over time.

Join us to learn actionable strategies for building ML products that not only address immediate goals but also grow and scale, delivering lasting business value. For the future professionals in the audience, there will be a little career advice sprinkled throughout the talk as well.

Biography

Dr. Mike Jones is an experienced leader and innovator in the field of machine learning, with over 30 years of expertise spanning both academic and industry roles. He earned a B.S. in Computer Science from Brigham Young University and an M.S. and Ph.D. in Computer Science from the University of Colorado at Boulder. Currently serving as Senior Director of Machine Learning (ML) at Proofpoint, Mike oversees a team of data scientists and engineers developing AI-driven products across security, data protection, and compliance. Mike has built and led cutting-edge ML systems at organizations such as Symantec, FamilySearch, and Mobile Productivity, specializing in scalable data pipelines, generative AI, and large-scale machine learning applications. His work has had a significant impact across industries, from security to genealogy, and he has authored several research publications in machine learning and information retrieval. A passionate mentor, Mike fosters the professional development of his teams, shaping the next generation of ML leaders and driving innovative AI research and development initiatives.

February 27, 2025

Details

When: March 6th @ 11am

Where: TMCB 1170

Speaker: Chen Zhao

Talk Title

Advances in Fairness and Robustness in Machine Learning Under Distribution Shifts

Abstract

In today's dynamic environments, machine learning models are increasingly deployed in contexts where data distributions evolve over time, posing significant challenges for both fairness and robustness. This talk explores cutting-edge methodologies designed to address these challenges, with a focus on supervised fairness-aware learning under distribution shifts. I will present novel frameworks that disentangle domain-specific and semantic information, enabling models to generalize across domains while maintaining fairness. Additionally, the discussion will highlight the impact of various types of distribution shifts—such as covariate, label, and concept shifts—on model performance and fairness. By leveraging fairness-aware optimization techniques, we aim to mitigate biases in dynamic data environments, ensuring ethical and equitable decision-making. The insights shared in this talk are drawn from both theoretical developments and empirical validations, underscoring the importance of fairness in machine learning in real-world applications, including hiring, healthcare, and financial services.

Biography

Dr. Chen Zhao is an Assistant Professor in the Department of Computer Science at Baylor University. Prior to joining Baylor, he was a senior R&D computer vision engineer at Kitware Inc. He earned his Ph.D. degree in Computer Science from the University of Texas at Dallas in 2021. His research focuses on machine learning, data mining, and trustworthy AI, particularly fairness-aware machine learning, novelty detection, uncertainty quantification, and domain generalization. His work has been published in premier conferences, including KDD, CVPR, IJCAI, AAAI, and WWW. Dr. Zhao has served as a program committee member for top international conferences, such as KDD, NeurIPS, IJCAI, ICML, AAAI, and ICLR. He has organized and chaired multiple workshops on Ethical AI, Uncertainty Quantification, Distribution Shifts, and Trustworthy AI for Healthcare at KDD (2022, 2023, 2024), AAAI (2023), and IEEE BigData (2024). He serves as the co-chair of the Challenge Cup of the IEEE BigData 2024 conference and tutorial co-chair for the Pacific-Asia Conference on Knowledge Discovery and Data Mining 2025.

February 24, 2025

Details

Where: TMCB 1170

When: February 27th, 2025

Speaker: Nathan Trewartha

Talk Title

Building Vast Worlds: Crafting Environmental Art in Lego Fortnite

Abstract

At the intersection of creativity and gameplay, the art of environmental design plays a pivotal role in shaping immersive game experiences. This talk delves into the intricate process of crafting vast, dynamic worlds through the procedurally generated system that Lego Fortnite employs. Participants will explore the foundational principles of environmental art, from concept development to final execution, including the unique challenges and opportunities presented by this hybrid universe.

Attendees will gain insights into the artistic techniques used to create rich, engaging landscapes that reflect both the whimsical nature of Lego and the vibrant aesthetics of Fortnite. Key topics will include designing interactive spaces, utilizing color theory to evoke emotion, and balancing functionality with visual storytelling within a modular framework. The discussion will also touch on the collaborative efforts between artists, designers, and world builders and how these interactions influence the final player experience.

Join us for an inspiring session that not only showcases the beauty of environmental game art but also empowers creators to think beyond traditional boundaries, showcasing how creativity and problem solving can transform games into intriguing worlds. Whether you're an artist, a gamer, or a fan of both worlds, this talk promises to ignite your imagination and elevate your understanding of the complexities and challenges that go into creating art for games and how to solve some of those challenges.

Biography

Nathan Trewartha is an Art Lead at Epic Games, currently working on Lego Fortnite. With a lifelong passion for illustration, he spent his elementary and high school years drawing and painting—sometimes to the dismay of his teachers.

He pursued his artistic talents at Brigham Young University, earning a Bachelor of Fine Arts (BFA) in illustration, followed by a Master of Fine Arts (MFA) from Utah State University. His career in game development began at Glyphics, a Utah-based studio, where he created characters and contributed to a Green Army man game, gaining experience in texture mapping, animation, and lighting.

Trewartha later co-founded Chair Entertainment with a group of BYU friends, a venture that led to the studio’s acquisition by Epic Games during the production of Shadow Complex. Since joining Epic, he has worked on Shadow Complex, the Infinity Blade trilogy, and now leads artistic efforts on Lego Fortnite.

February 18, 2025

Details

When: February 20th, 2025 @ 11am

Where: TMCB 1170

Speaker: Chris Harvey

Talk Title

The story of shading in film and games

Abstract

Shading is a vital part of the process that brings compelling visuals to our movies and games, but what is it exactly, and how has it evolved over the last 30 years? We’ll delve into the technology: past, present, and future. We’ll touch on how video games have evolved at a breakneck pace seeking ever more realistic visuals, and how this art continues to evolve to create ever more engaging imagery.

Biography

Chris Harvey is a technical artist in Meta’s Reality Labs organization. There he combines his technical and artistic talents to further Meta’s goals of building customizable avatars for users across the world. After graduating from BYU with a BFA in animation, he spent the next 20 years working in visual effects, software development, and animation. He has worked in both artistic and technical roles and believes that there is always more to learn.

February 06, 2025

Details

Where: TMCB 1170

When: February 13th, 2025

Speaker: Marianna J. Martindale

Talk Title

Risks and Mitigations for Machine Translation Enabled Triage in Intelligence Analysis

Abstract

The perception of high Machine Translation (MT) quality, based largely on performance translating between high resource languages, has led to its adoption for a variety of use cases. However, MT is still imperfect and can even be misleading in its errors. Government use cases such as those involving law enforcement or intelligence are uniquely risky because government action or inaction based on incorrect information can cause harm to individuals or national security. One such use case is MT for triage in intelligence analysis. In this talk, I will first introduce the MT-enabled triage use case and some of the risks in the context of intelligence analysis as well as U.S. intelligence community policies implicated by this use case that may partially mitigate these risks. I will then present a user study with intelligence analysts to measure their baseline performance on this type of MT-enabled triage task and the effectiveness of providing additional translation outputs as a practical intervention to further mitigate risk.

Biography

Marianna J. Martindale received her PhD in Information Studies from the University of Maryland in August 2024 after previously receiving her BS in Computer Science from BYU (2003) and MS in Linguistics (Computational) from Georgetown University (2010). Since 2003, she has worked for the US Government supporting, building, and deploying machine translation systems. She is currently serving as the secretary on the board of the Association for Machine Translation in the Americas. Her research interests include user-centered machine translation evaluation and how to help users calibrate their trust in generative AI model outputs.

February 03, 2025

Details

In place of our weekly seminar, we will be hosting our annual 3MT competition. Three graduate students will have three minutes to present their thesis and the winner will go on to compete at the university level.

Where: TMCB 1170

When: 11am

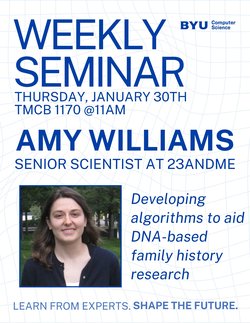

January 24, 2025

Details

When: January 30th, 2025

Where: TMCB 1170

Speaker: Amy Williams

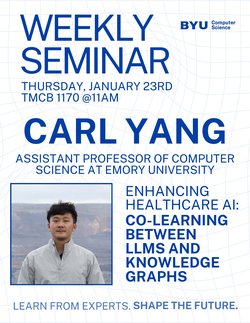

January 22, 2025

Details

When: January 23rd, 2025

Where: TMCB 1170

Speaker: Carl Yang

January 15, 2025

Details

When: Thursday, January 16th, 2025

Where: TMCB 1170

Speaker(s): Alyssa Stringham, Zando Ward, Russ Young, Sarah Wiley

December 19, 2024

Luke 2:10-11, 13-14

And the angel said unto them, Fear not: for, behold I bring you good tidings of great joy, which shall be to all people. For unto you is born this day … a Saviour, which is Christ the Lord ... And suddenly there was with the angel a multitude of the heavenly host praising God, and saying, Glory to God in the highest, on earth peace, good will toward men.

Merry Christmas from our families to yours.

December 04, 2024

Where

TMCB 1170

When

December 5th, 2024

Talk Title

7 Principles of Success – Insights from 40 years in tech

Abstract

After nearly four decades in tech, I have learned lessons that go beyond writing code or managing systems. I have chosen seven of these lessons, expressed as principles, which I consider the most important not only to our careers but also to our lives. I will share stories and observations from the trenches -- both the wins and the face-palm moments -- that shaped these insights. My goal is to share these ideas in ways that will help you navigate your own professional and personal journeys.

Biography

Peter Whiting is the Church’s Chief Technology Officer and leads the Technology and Platform Services Division within the Church of Jesus Christ of Latter-day Saints. In these roles Peter defines the overarching technical direction of the Church and guides the teams responsible for the underlying technology used by the Church. Peter brings 35 years of diverse technical experience into this role: undersea signal processing, global internet network design and operation, and managed IT outsourcing. He is passionate about leveraging technology to improve the lives of those who live in under-resourced communities. Through extensive world travel, Peter has observed their challenges as well as their aptitudes. Accordingly, his technology interests are focused on enabling their success. Prior to working for the Church, Peter was Sprint’s Chief Technologist responsible for their internet business unit as the Internet transitioned from a government-controlled network to a commercial entity. Before that, he was a research scientist at IBM working on the US Navy’s undersea acoustic platforms. Peter earned a PhD from the University of Kansas in 2001. His dissertation dealt with applying the principles of machine learning and artificial intelligence to network protocol design.

November 18, 2024

Details

November 21st

TMCB 1170 @ 11:00 AM

Talk Title

Toward Decision Making in the Real World: From Trustworthy to Actionable

Abstract

Reinforcement learning (RL) has achieved success in areas such as gaming, robotics, and language models, sparking curiosity about its applicability in the real world. When applying RL to real-world decision-making, challenges arise related to data and models. We will discuss the implications of these challenges on the feasibility of RL and share preliminary efforts to address them. These efforts include developing realistic simulators and bridging the gap between simulation and the real world through uncertainty quantification and the use of language models.

Biography

Hua Wei is an assistant professor at the School of Computing and Augmented Intelligence (SCAI) at Arizona State University (ASU). He received his PhD from Pennsylvania State University in 2020. He specializes in data mining, artificial intelligence, and machine learning. He received the Best Paper award at ECML-PKDD 2020, and his and his students' research has been published in top conferences and journals in the fields of machine learning, artificial intelligence, data mining, and control (NeurIPS, AAAI, CVPR, KDD, IJCAI, ITSC, ECML-PKDD, WWW). His research has been funded by NSF, DoE, and DoT.

November 07, 2024

When: November 14th @ 11am

Where: TMCB 1170

Talk Title: Predicting Liver Segmentation Model Failure with Feature-Based Out-of-Distribution Detection and Generative Adversarial Networks

Advanced liver cancer is often treated with radiotherapy, which requires precise liver segmentation. While deep learning models excel at segmentation, they struggle on image attributes not seen during training. To ensure quality care for all patients, my research focuses on automated, scalable, and interpretable solutions for detecting liver segmentation model failures. In this talk, I will first present accurate and scalable solutions that utilize model features extracted at inference. I will then introduce generative modeling for the localization of novel information, an approach that integrates interpretability into the detection pipeline.

October 31, 2024

When: November 7th @ 11am

Where: TMCB 1170

Talk Title: Reconstructing parental genomes and near-perfect phasing using data from millions of people

The advent of large genotyped cohorts from genetic testing companies and biobanks has opened the door to a host of analyses, and these cohorts implicitly include data for massive numbers of relatives. Genetic relatives share identity-by-descent (IBD) segments they inherited from common ancestors, and several methods have been developed to reconstruct ancestors’ DNA from relatives. We present HAPI-RECAP, a tool that reconstructs the DNA of parents from full siblings and their relatives. Given data for one parent, phasing alone with HAPI2 reconstructs large fractions of the missing parent’s DNA, between 77.6% and 99.97% among all families, and 90.3% on average in three- and four-child families. When reconstructing both parents, HAPI-RECAP infers between 33.2% and 96.6% of the parents’ genotypes, averaging 70.6% in four-child families. Reconstructed genotypes have average error rates < 10⁻³, comparable to those from direct genotyping. Besides relatives, massive genetic studies enable precise haplotype inference. We benchmarked state-of-the-art methods on > 8 million diverse, research-consented 23andMe, Inc. customers and the UK Biobank (UKB), finding that both perform exceptionally well. Beagle’s median switch error rate (after excluding single SNP switches) in white British trios from UKB is 0.026% compared to 0.00% for European ancestry 23andMe research participants; 55.6% of European ancestry 23andMe participants have zero non-single SNP switches, compared to 42.4% of white British trios in UKB. SHAPEIT and Beagle excel at ‘intra-chromosomal’ phasing but lack the ability to phase across chromosomes, motivating us to develop an inter-chromosomal phasing method called HAPTIC (HAPlotype TIling and Clustering) that assigns paternal and maternal variants discretely genome-wide. Our approach uses IBD segments to phase blocks of variants on different chromosomes.
We ran HAPTIC on 1022 UKB trio children, yielding a median phase error of 0.08% in regions covered by IBD segments (33.5% of sites) and on 23andMe trio children, finding a median phase error of 0.92% in Europeans (93.8% of sites) and 0.09% in admixed Africans (92.7% of sites). HAPTIC’s precision depends heavily on data from relatives, so will increase as datasets grow larger and more diverse. HAPTIC and HAPI-RECAP enable analyses that require the parent-of-origin of variants, such as association studies and ancestry inference of untyped parents.

October 28, 2024

When: October 31st @ 11am

Where: TMCB 1170

Talk Title: Translation and Multilinguality in the Age of Large Language Models

Abstract: We are currently witnessing a convergence in the field of natural language processing toward a unifying framework built on foundational language models. This talk traces the development of these models out of the interplay of translation and language model research. Large language models have fundamentally changed how machine translation systems are built. The talk will highlight where these technologies stand, what new capabilities language models enable for translation, and what constitutes best practice when building machine translation systems for deployment. The talk will also show how language models currently struggle to work well in languages beyond English and what can be done to address this.

October 24, 2024

Where: TMCB 1170

When: October 24, 2024, 11am

Come meet Nathaniel Bennett, BYU CS grad and current PhD student at University of Florida!